IP Camera Protocols And Standards Explained

Delving into the technical details of how our IP cameras do their thing may not seem necessary to most people, but as smart home power users we aren’t most people. DIY security camera brands are usually closed systems designed to be easy for the layperson to install and use. That’s fine unless you want to start diversifying your camera choices or ditching cloud-based recording.

You’ll rapidly find yourself dealing with a bunch of technical terms relating to how the cameras talk to recording systems and video players. This is even more true if you want to use third-party integration tools to bridge the gap between various camera brands and smart home platforms.

When I started trying to understand all these protocols and standards, I found it surprisingly hard to work out what, exactly, each one does and how the pieces relate to each other. My aim here is to make that easier for others by laying out the various terms you may come across when messing with cameras and network recording systems.

There are a few different buckets we’ll look at:

Security System Specifications - These are industry-agreed definitions of how various security system components will talk to each other: which protocols they use, data formats, transport methods, and so forth. They’re high-level agreements for how things should work together, like technical standards, but are not necessarily ratified as such.

Streaming and Control Protocols - These are (usually) standards for specific data formats to be sent over the network that enable various data transfer and control actions to take place. Protocols like these are the basis of all network communications that allow devices to understand each other, kind of like languages, and are often layered on top of each other as the actions get more specific.

Video and Audio Codecs - To reduce its size for efficient transport and storage, audio and video data is almost always encoded into a format that effectively compresses it, hopefully with minimal quality loss. These formats are called codecs (COder/DECoder). There are many of them, with different pros and cons, some open standards and some proprietary.

These pieces are combined in various ways, depending on the circumstances, to allow video and audio to be sent and received.

Security system specifications

In the past, CCTV and building security systems were almost all proprietary technology with tight vendor lock-in. In 2008, two different groups of companies formed alliances to try to standardize the interfaces between the various types of equipment. This resulted in two different industry specifications that have continued to the present.

ONVIF and PSIA both deal with the wider landscape of building security systems, not just cameras. This includes things like intercoms, access control readers, door locks, sensors, and so forth. More than just defining streaming specifications for camera interoperability, they provide for device discovery and configuration services as well.

Open Network Video Interface Forum (ONVIF)

ONVIF has become the more widely deployed specification and de-facto standard for IP-based security systems, and is the one you’ll likely encounter when building a DIY camera system. This is because ONVIF is built on open web standards like XML for data definition and SOAP for message transport.
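ONVIF’s discovery service is a good example of that web-standards foundation: it uses WS-Discovery, in which a client multicasts a SOAP Probe message over UDP and compliant cameras reply with the URLs of their ONVIF device service. The Python sketch below shows roughly what that exchange looks like. The multicast address 239.255.255.250:3702 and the namespaces come from the WS-Discovery specification; the function names are hypothetical, and a real client would also need to handle duplicate replies, multiple network interfaces, and authentication for the calls that follow.

```python
import socket
import uuid
import xml.etree.ElementTree as ET

# WS-Discovery multicast endpoint that ONVIF devices listen on.
MULTICAST_ADDR = ("239.255.255.250", 3702)
DISCOVERY_NS = "http://schemas.xmlsoap.org/ws/2005/04/discovery"

# SOAP Probe asking for ONVIF video devices (NetworkVideoTransmitter).
PROBE_TEMPLATE = """<?xml version="1.0" encoding="UTF-8"?>
<e:Envelope xmlns:e="http://www.w3.org/2003/05/soap-envelope"
 xmlns:w="http://schemas.xmlsoap.org/ws/2004/08/addressing"
 xmlns:d="http://schemas.xmlsoap.org/ws/2005/04/discovery"
 xmlns:dn="http://www.onvif.org/ver10/network/wsdl">
 <e:Header>
  <w:MessageID>uuid:{message_id}</w:MessageID>
  <w:To e:mustUnderstand="true">urn:schemas-xmlsoap-org:ws:2005:04:discovery</w:To>
  <w:Action e:mustUnderstand="true">http://schemas.xmlsoap.org/ws/2005/04/discovery/Probe</w:Action>
 </e:Header>
 <e:Body>
  <d:Probe><d:Types>dn:NetworkVideoTransmitter</d:Types></d:Probe>
 </e:Body>
</e:Envelope>"""


def build_probe(message_id=None):
    """Return the Probe message as bytes, with a fresh message ID unless one is given."""
    return PROBE_TEMPLATE.format(message_id=message_id or uuid.uuid4()).encode("utf-8")


def parse_xaddrs(response):
    """Extract the device service URLs (XAddrs) from a ProbeMatches reply."""
    root = ET.fromstring(response)
    urls = []
    for node in root.iter(f"{{{DISCOVERY_NS}}}XAddrs"):
        urls.extend((node.text or "").split())  # XAddrs is a space-separated list
    return urls


def discover(timeout=3.0):
    """Multicast a Probe and collect XAddrs from every reply until the timeout (needs a real LAN)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.settimeout(timeout)
    found = []
    try:
        sock.sendto(build_probe(), MULTICAST_ADDR)
        while True:
            data, _ = sock.recvfrom(65535)
            found.extend(parse_xaddrs(data))
    except socket.timeout:
        pass
    finally:
        sock.close()
    return found
```

On a network with ONVIF cameras, discover() should return a list of device service URLs, which are the starting point for the SOAP calls that fetch stream addresses and configuration.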

ONVIF also has a larger manufacturer membership, which has led to a wide proliferation of ONVIF-compatible components. Those components get picked up by many non-member manufacturers to build ‘ONVIF-compatible’ products, but this can be a trap for the unwary.

There is a formal certification process to ensure ONVIF devices will work together properly, and these off-brand devices may not implement the specification correctly. Thankfully, you can confirm whether a product you are looking at is genuine by using the Forum’s conformance checker to see if it has been certified.

The ONVIF specification is based on software profiles that can be added to devices as necessary. These profiles encapsulate different functionality covering the various aspects of security systems. For IP cameras you’ll typically see G, M, S, and T.

A - Access control systems

C - Door control

D - Access control peripherals

G - Edge storage and retrieval (video)

M - Metadata analytics (facial recognition, license plate scanning, streaming control)

S - Basic video streaming

T - Advanced video streaming (H.264/H.265, bi-directional audio, motion/tampering events)

While the overall specification continues to evolve with different version numbers, these profile definitions are guaranteed to be backwards compatible so older versions won’t be cut off. This provides additional stability, interoperability and confidence for manufacturers.

Physical Security Interoperability Alliance (PSIA)

This smaller group tends to be more focused on professional-grade equipment and larger systems integrators. Built on a common web REST architecture, it allows a variety of discovery methods such as Bonjour, UPnP, and Zeroconf. This means it falls short of ONVIF on interoperability, because different PSIA devices can use incompatible methods of finding each other.

Being a more closed specification, and less useful for consumers, PSIA has seen slower adoption, and you are unlikely to find it on consumer devices except those that support both specifications.

IP Camera Streaming protocols

This is where things can get a bit muddy. It would be nice if the various protocols used for streaming devices had clearly demarcated roles, but that isn’t the case. There is overlap and duplication at play, which is why it can be hard to get a clear view on what does what.

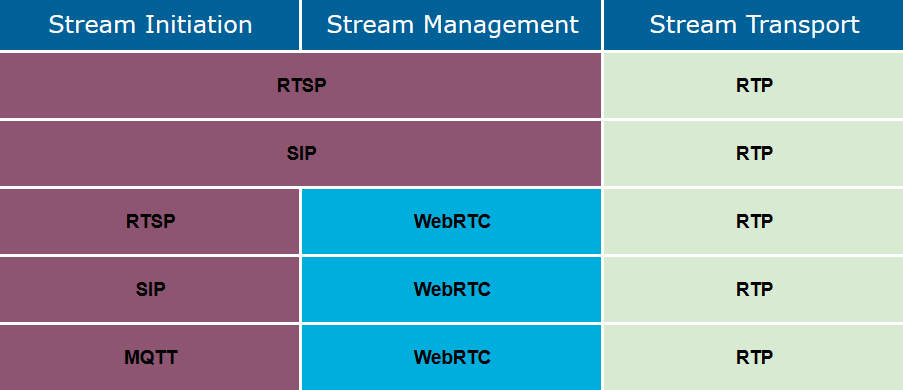

In a nutshell, there are three roles to cover when we want to stream data from one device to another:

Stream Initiation - Also known as Session Initiation, this means finding the other party, establishing a connection we can use for the stream, and managing that session over its lifetime.

Stream Management - This relates to negotiating the parameters for the media stream to be used between the two end points.

Stream Transport - This is the transfer of the actual audio and video data, making sure it gets where it needs to go.

The protocols used will depend on the situation and developer preferences, for example whether the setup is client/server or peer-to-peer.

Streaming Protocols - Quick Overview

Real Time Streaming Protocol (RTSP)

This is the grandfather of streaming protocols, first proposed in 1996 in the early days of the World Wide Web. RTSP 1.0 was published as RFC 2326 in 1998, and the current version, RTSP 2.0, is defined in IETF RFC 7826, although most cameras still use 1.0. Ironically, neither version has progressed beyond the IETF’s ‘Proposed Standard’ level. Nonetheless, it is the protocol defined for streaming data in both the ONVIF and PSIA specifications.

RTSP is a text-based message protocol similar to HTTP, but unlike HTTP it is stateful: the server assigns a session identifier that ties successive requests to the same stream. It’s best suited to client/server scenarios such as IP camera to NVR installations, where a pre-defined set of senders streams to a single known receiver.

Because it deals with the messaging and management aspects of the session, it doesn’t carry the actual stream data itself; it relies on a separate data transport protocol for that. Plain RTSP also has no encryption of its own: securing the session requires running it over TLS (the rtsps:// scheme), which not every camera supports.

You can think of these kinds of control protocols as a remote control that sends commands to the sender to set up, start, pause, or stop a stream. For example, a PLAY request looks like this:

    PLAY rtsp://example.com/media.mp4 RTSP/1.0
    CSeq: 4
    Range: npt=5-20
    Session: 12345678
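Because RTSP is plain text, a request like the PLAY example above is just a request line and a few headers, each ending in CRLF, followed by a blank line. The Python sketch below assembles one, and parses the Session header a server returns after a SETUP request, which may carry an optional timeout. The helper names are hypothetical, and a real client would also need to read responses from a TCP socket and handle authentication.

```python
def build_rtsp_request(method, url, cseq, headers=None):
    """Format an RTSP/1.0 request: request line, CSeq, extra headers, then a blank line."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    return "\r\n".join(lines) + "\r\n\r\n"


def parse_session_header(value):
    """Split a Session header such as '12345678;timeout=30' into (session_id, timeout_seconds).

    RFC 2326 specifies a 60 second timeout when the server doesn't state one.
    """
    session_id, _, params = value.partition(";")
    timeout = 60
    for param in params.split(";"):
        key, _, val = param.strip().partition("=")
        if key.lower() == "timeout" and val:
            timeout = int(val)
    return session_id.strip(), timeout


play = build_rtsp_request(
    "PLAY", "rtsp://example.com/media.mp4", 4,
    {"Range": "npt=5-20", "Session": "12345678"},
)
print(play)
print(parse_session_header("12345678;timeout=30"))
```

The CSeq value increases with each request so the client can match responses to the requests that triggered them.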

Note that because of the tightly coupled relationship between these devices, RTSP is able to handle both initiation and management of the session. It also means that if the stream is to be played on a device or app that can’t handle RTSP directly, such as a web browser, the RTSP client (often the NVR) will usually ‘rebroadcast’ it using another method.

Session Initiation Protocol (SIP)

This is another text-based control protocol that became prominent along with the rise in VoIP calling. It can be considered a competitor to RTSP in some respects, as they perform similar roles, but its focus is on peer-to-peer connections. For this reason, it’s common to see it used for cloud-based consumer cameras like those from Ring, where ad-hoc streaming connections are established between arbitrary devices.

As part of setting up a session, the clients (known as user agents) use another protocol called SDP (Session Description Protocol). This is a bit like a business card: a simple text description of the client’s addressing information and the media types, transport protocols, and codecs it can handle.
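Because SDP is plain text with one field per line, pulling out the useful parts takes only a few lines of code. The Python sketch below is hypothetical and ignores most SDP fields: it collects each m= (media) line with its port and payload types, then uses a=rtpmap lines to name the codec behind dynamic payload types such as 96. Static payload types like 0 (G.711 mu-law, written PCMU) need no rtpmap line, so a small lookup table covers a few of them. The sample description and addresses are made up for illustration, and the sketch assumes RTP media lines with numeric payload types.

```python
# A few static payload types from the RTP audio/video profile (RFC 3551).
STATIC_PAYLOAD_TYPES = {0: "PCMU/8000", 8: "PCMA/8000", 26: "JPEG/90000", 34: "H263/90000"}


def parse_sdp_media(sdp):
    """Return one dict per m= line with its port, transport and payload-type-to-codec map."""
    media = []
    for raw in sdp.splitlines():
        line = raw.strip()
        if line.startswith("m="):
            # m=<media> <port>[/<count>] <proto> <payload type> ...
            kind, port, proto, *formats = line[2:].split()
            codecs = {int(pt): STATIC_PAYLOAD_TYPES.get(int(pt)) for pt in formats}
            media.append({"type": kind, "port": int(port.split("/")[0]),
                          "proto": proto, "codecs": codecs})
        elif line.startswith("a=rtpmap:") and media:
            # a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
            pt, encoding = line[len("a=rtpmap:"):].split(None, 1)
            media[-1]["codecs"][int(pt)] = encoding
    return media


camera_sdp = """v=0
o=camera 123456 1 IN IP4 192.168.1.210
s=-
c=IN IP4 192.168.1.210
t=0 0
m=video 6666 RTP/AVP 96
a=rtpmap:96 H264/90000
m=audio 4444 RTP/AVP 0
"""
for stream in parse_sdp_media(camera_sdp):
    print(stream["type"], stream["port"], stream["codecs"])
```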

SIP was developed by the internet community through the IETF, first defined in RFC 2543 in 1999 and later revised as RFC 3261 in 2002. Like RTSP, it deals mostly with establishing multi-party sessions for voice and video streaming and doesn’t carry the streaming data itself. Having been designed for use over the internet, it does have some encryption capability: signaling can be protected with TLS (using sips: addresses) in the same way HTTPS encrypts traffic between web server and client.

SIP requests use a format similar to HTTP, but instead of commands like GET and POST, you have things like INVITE, UPDATE, and BYE. Also like HTTP, these requests generate 3-digit response codes, such as 180 Ringing or 200 OK, to inform the other end of the result of the request.
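Those three-digit codes follow the same scheme as HTTP: the first digit gives the class of response. A minimal Python sketch (the helper name is hypothetical) of how a user agent might interpret the status line of each reply to an INVITE, for example 100 Trying, then 180 Ringing, then 200 OK:

```python
# Response classes defined by SIP (RFC 3261); the first digit sets the class.
SIP_RESPONSE_CLASSES = {
    1: "Provisional",
    2: "Success",
    3: "Redirection",
    4: "Client error",
    5: "Server error",
    6: "Global failure",
}


def parse_status_line(line):
    """Split a SIP status line like 'SIP/2.0 180 Ringing' into (code, reason, class)."""
    version, code, reason = line.split(" ", 2)
    if version != "SIP/2.0":
        raise ValueError(f"not a SIP/2.0 response: {line!r}")
    status = int(code)
    return status, reason, SIP_RESPONSE_CLASSES.get(status // 100, "Unknown")


for line in ["SIP/2.0 100 Trying", "SIP/2.0 180 Ringing", "SIP/2.0 200 OK", "SIP/2.0 486 Busy Here"]:
    print(parse_status_line(line))
```

Provisional (1xx) responses only report progress; the call is set up once a final 2xx response arrives.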

SIP Invite Example

    INVITE sip:Manager@station2.work.com SIP/2.0
    From: Daniel <sip:Collins@station1.work.com>;tag=abcd1234
    To: Boss <sip:Manager@station2.work.com>
    CSeq: 1 INVITE
    Content-Length: 240
    Content-Type: application/sdp
    Content-Disposition: session

    v=0
    o=collins 123456 001 IN IP4 station1.work.com
    s=-
    c=IN IP4 station1.work.com
    t=0 0
    m=audio 4444 RTP/AVP 2 4 15
    a=rtpmap:2 G726-32/8000
    a=rtpmap:4 G723/8000
    a=rtpmap:15 G728/8000
    m=video 6666 RTP/AVP 34
    a=rtpmap:34 H263/90000

Message Queuing Telemetry Transport (MQTT)

This is a bit of a side-step, but one we need to include for its use in certain streaming scenarios. First developed in 1999, it’s a lightweight messaging protocol designed for IoT devices that is easy to set up and easy to process on low-end hardware. It’s not specifically a streaming control protocol, but because it’s bi-directional it can be used for session initiation, and it can also handle related camera functions such as reporting sensor changes like motion detection events and sending still snapshot images.

MQTT is relevant here as it can be used to set up sessions for WebRTC streams.
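To give a sense of how lightweight MQTT is on the wire, this Python sketch hand-builds an MQTT 3.1.1 PUBLISH packet of the kind a camera might send to report a motion event. The topic name and JSON payload are hypothetical, since MQTT leaves both entirely up to the application, and in practice you would use an MQTT client library and a broker rather than assembling bytes yourself.

```python
def encode_remaining_length(n):
    """MQTT variable-length integer: 7 bits per byte, high bit set when more bytes follow."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte, n = n % 128, n // 128
        if n:
            byte |= 0x80  # continuation bit
        out.append(byte)
        if not n:
            return bytes(out)


def build_publish(topic, payload):
    """Build an MQTT 3.1.1 PUBLISH packet at QoS 0 (so no packet identifier is needed)."""
    topic_bytes = topic.encode("utf-8")
    # Variable header: topic name as a 2-byte length prefix followed by UTF-8 bytes.
    body = len(topic_bytes).to_bytes(2, "big") + topic_bytes + payload
    # Fixed header: packet type 3 (PUBLISH) in the high nibble, flags all zero.
    return bytes([0x30]) + encode_remaining_length(len(body)) + body


# Hypothetical topic and payload for a motion event.
packet = build_publish("cameras/front_door/motion", b'{"motion": true}')
print(len(packet), packet.hex())
```

For a short message like this, the framing overhead is a two-byte fixed header plus a two-byte length prefix on the topic name, which is a large part of why MQTT suits low-powered hardware.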

Web Real Time Communications (WebRTC)

Google acquired the underlying technologies for this protocol in 2010 and open-sourced them as part of initiating the standards process. The first working implementation was built in 2011, and WebRTC 1.0 became a formal W3C Recommendation in 2021. Long before that, WebRTC had become a de facto standard for web-based streaming thanks to near-universal adoption by web browsers.

What makes WebRTC significant is that it allows data to stream directly between browsers without routing it through an intermediary server, an ability that also extends to smartphone apps and other connected devices…like cameras. This is also what will most likely be used to rebroadcast an RTSP stream for remote viewing. Note that WebRTC is not limited to audiovisual streams but can carry any kind of data. File sharing websites use it, for example, to let people transfer files over the internet using only their web browsers.

Again, WebRTC does not actually carry the stream data itself, but sets up and manages the session to do so. If you look at the diagram above, you’ll see that it still sits behind another protocol like SIP or MQTT. This is because WebRTC has no discovery capability of its own; it only works between two end points that already know about each other. As such, one of the other messaging protocols (typically SIP) is usually used to establish the session first, which then allows WebRTC to handle the stream setup without the need for extra plugins, apps, or drivers.

In order to standardize streaming capability across browsers, WebRTC defines a required set of codecs that all supporting browsers must be able to handle. As a baseline for video, this means H.264 and VP8, with VP9 as an option. For audio, it’s Opus and G.711, with G.722, iLBC, and iSAC as options.
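That mandatory list is what lets an NVR or bridge decide whether it can pass a camera’s stream straight through to a browser or needs to transcode it first. A minimal Python sketch, assuming codec names as they appear in SDP rtpmap lines (G.711 shows up as PCMU or PCMA); the function name is hypothetical, and it ignores details such as H.264 profiles that matter in practice:

```python
# Mandatory WebRTC codecs named as in SDP rtpmap lines.
WEBRTC_REQUIRED_VIDEO = ["H264", "VP8"]
WEBRTC_REQUIRED_AUDIO = ["opus", "PCMU", "PCMA"]  # G.711 is PCMU (mu-law) or PCMA (A-law)


def pick_passthrough_codec(camera_codecs, required):
    """Return the first camera codec every WebRTC browser must decode, or None if transcoding is needed."""
    wanted = {name.upper() for name in required}
    for codec in camera_codecs:  # camera_codecs is in the camera's order of preference
        if codec.upper() in wanted:
            return codec
    return None


print(pick_passthrough_codec(["H265", "H264"], WEBRTC_REQUIRED_VIDEO))
print(pick_passthrough_codec(["H265"], WEBRTC_REQUIRED_VIDEO))
print(pick_passthrough_codec(["AAC", "PCMU"], WEBRTC_REQUIRED_AUDIO))
```

A camera that only offers H.265 video or AAC audio falls through to None, meaning the bridge would have to transcode before a browser is guaranteed to play it.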

Real Time Transport Protocol (RTP)

This is where the rubber meets the road. All the protocols above are about setting up and controlling a streaming session, but none of them actually carry the video data. That’s where RTP comes in. RTP is a transport protocol; it carries the encoded audio and video data from A to B. Originally published as RFC 1889 in 1996, the same year RTSP was first proposed, its current definition was published in 2003 as RFC 3550.

RTP is a full IETF Internet Standard (STD 64) and is the most commonly used transport protocol for RTSP, SIP, and WebRTC, although specific applications can use other protocols if they need different capabilities.

A companion protocol, RTCP (RTP Control Protocol), helps gauge the success of the data transfer by providing out-of-band statistics such as packet loss, jitter, and round-trip time, which applications can use to adjust things like bitrate or video resolution. Out-of-band simply means that it travels in a separate channel from the stream data.

Because different media streams, such as audio and video, can be sent in separate RTP sessions, RTCP also helps synchronize them at the other end and monitor quality of service. RTP itself does not guarantee quality of service, however; it simply provides the data needed for it to be handled elsewhere if required, by WebRTC for example.

RTP is media format agnostic, which means it doesn’t need to be updated when new formats come along. Instead, each media type is defined by a profile that specifies which codecs are to be used. Various RFCs exist to cover mapping different audio and video types such as MPEG-4 and H.263 to RTP packets.

RTP typically runs over UDP, because streaming data can tolerate some loss without significant degradation and timeliness of delivery matters more. The control protocol may use TCP to maintain the connection and session data, as is the case with RTSP. Each RTP packet begins with a header containing the profile references and formatting data needed to interpret it:

RTP packet header structure

Each data field is defined as follows:

Version - Protocol version being used (always 2 for current RTP).

P (Padding) - If set, the packet ends with padding bytes, used for example by encryption algorithms that need a fixed block size. The last padding byte gives the number of padding bytes added, including itself.

X (Extension) - If set, the fixed header is followed by a header extension, which some profiles require.

CC (CSRC count) - The number of CSRC identifiers (see below)

M (Marker) - If set, the current packet has some special relevance for the application; for video, it typically marks the last packet of a frame.

PT (Payload type) - Indicates the format of the payload as required by the specific profile.

Sequence number - Incremented by one for each packet sent, and used by the receiver to detect packet loss and restore packet order. The initial value is random, to make known-plaintext attacks on encrypted streams more difficult.

Timestamp - Used by the receiver to play back the received samples at the appropriate time and interval. The clock rate depends on the media type, such as 8 kHz for telephone-quality audio or 90 kHz for video, and is specified in the media profile.

SSRC - Synchronization source identifier uniquely identifies the source of a stream.

CSRC - Contributing source IDs. A stream may have multiple contributing sources, for example when a mixer combines several audio feeds. Each one has its own CSRC field, up to a maximum of 15, and the number present is given by the CC field above.

Header extension - (optional, presence indicated by Extension field) The first 32-bit word contains a profile-specific identifier (16 bits) and a length specifier (16 bits) that indicates the length of the extension in 32-bit units, excluding the 32 bits of the extension header.
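Those fields pack into a fixed 12-byte header, followed by 32 bits for each CSRC. The Python sketch below decodes it with the standard struct module and adds two small helpers: one counts packets missing between two sequence numbers (allowing for the 16-bit counter wrapping around, and assuming packets arrive in order), the other converts timestamp differences to seconds. The function names are hypothetical, and header extensions and padding are not handled.

```python
import struct

# Fixed 12-byte RTP header (RFC 3550), network byte order:
# V/P/X/CC byte, M/PT byte, 16-bit sequence, 32-bit timestamp, 32-bit SSRC.
RTP_FIXED_HEADER = struct.Struct("!BBHII")


def parse_rtp_header(packet):
    """Decode the fixed RTP header and any CSRC list; header extensions are not decoded."""
    if len(packet) < RTP_FIXED_HEADER.size:
        raise ValueError("packet too short for an RTP header")
    b0, b1, seq, timestamp, ssrc = RTP_FIXED_HEADER.unpack_from(packet)
    cc = b0 & 0x0F
    if len(packet) < RTP_FIXED_HEADER.size + 4 * cc:
        raise ValueError("packet too short for its CSRC list")
    return {
        "version": b0 >> 6,
        "padding": bool(b0 & 0x20),
        "extension": bool(b0 & 0x10),
        "csrc_count": cc,
        "marker": bool(b1 & 0x80),
        "payload_type": b1 & 0x7F,
        "sequence": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
        "csrcs": list(struct.unpack_from(f"!{cc}I", packet, RTP_FIXED_HEADER.size)),
    }


def packets_lost(prev_seq, seq):
    """Gap between two in-order sequence numbers, allowing for 16-bit wraparound."""
    return (seq - prev_seq - 1) % 65536


def ticks_to_seconds(ticks, clock_rate=90_000):
    """Convert a timestamp difference to seconds (90 kHz is the usual video clock)."""
    return ticks / clock_rate


# Test packet: version 2, marker set, dynamic payload type 96, no CSRCs.
sample = struct.pack("!BBHII", 0x80, 0x80 | 96, 1000, 3000, 0x1234ABCD) + b"payload"
header = parse_rtp_header(sample)
print(header["version"], header["payload_type"], header["marker"], header["sequence"])
print(packets_lost(65534, 1), ticks_to_seconds(3000))
```

At 30 frames per second, each new video frame advances a 90 kHz timestamp by 3000 ticks, which is how the receiver knows to show it 1/30 of a second after the previous one.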

Video Codecs

The data carried by the streaming protocols to a receiver, whether for viewing or recording, needs to be compressed in some way. Raw video is enormous and would consume far more bandwidth than we can afford if we want a fast, high-quality feed.

A codec (a portmanteau of ‘coder-decoder’) is an algorithm, implemented in software or dedicated hardware, that performs this compression in a specific way so that it can be reversed at the other end. The compression step is known as ‘encoding’ and the reverse as ‘decoding’. Modern codecs are extremely effective at reducing the size of the data without significant perceptible quality loss.
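To put ‘enormous’ into numbers, here is a quick back-of-the-envelope calculation in Python. It assumes 1080p video at 30 frames per second with 8-bit 4:2:0 color sampling (12 bits per pixel), compared against an assumed 4 Mbit/s H.264 stream, a plausible camera setting rather than a universal one.

```python
def raw_bitrate_bps(width, height, fps, bits_per_pixel=12):
    """Uncompressed video bitrate; 12 bits per pixel matches 8-bit YUV 4:2:0 sampling."""
    return width * height * bits_per_pixel * fps


raw = raw_bitrate_bps(1920, 1080, 30)
compressed = 4_000_000  # assumed 1080p H.264 camera stream of 4 Mbit/s
print(f"raw: {raw / 1e6:.1f} Mbit/s, compression ratio about {raw / compressed:.0f}:1")
# prints: raw: 746.5 Mbit/s, compression ratio about 187:1
```

Under those assumptions the codec is shrinking the video by well over a hundred times, which is what makes multi-camera Wi-Fi setups practical at all.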

A handful of codecs are in popular use for streaming in IP camera scenarios. Different codecs may be used for the camera feed itself and for playback from an NVR, depending on the viewing client. Converting a video source from one codec to another is known as ‘transcoding’.

Common video streaming codecs

H.264/AVC - Developed jointly by the ITU-T and ISO/IEC MPEG groups and also known as MPEG-4 AVC, H.264 has been around for a while now and has become the de facto standard for internet video applications. Just about every device supports it, so it’s a good fallback for streaming to arbitrary clients. At around half the file size of MPEG-2 for similar quality it delivers high-quality video, but it is becoming dated now that higher-resolution sources are mainstream.

H.265/HEVC - The successor to H.264, it delivers similar quality at roughly half the bitrate, which lets it handle 4K video well. It’s still not royalty-free, though, so it is being squeezed out of the market by newer royalty-free alternatives.

VP8 / VP9 - Google released these codecs specifically for web streaming, and to avoid royalties. They are free to use, and VP9 is the main codec used by YouTube. VP9 is similar in capability to H.265 and handles 4K well (VP8 is closer to H.264). Because it’s tailored for large audiences, it delivers a more consistent streaming experience, but at slightly lower quality than H.265.

AV1 - Developed by the Alliance for Open Media (AOMedia), an industry group that includes all the major tech players, AV1 is designed to replace current streaming codecs by delivering around 30% better compression than HEVC. It can produce the highest-quality stream at a given bandwidth of any of these codecs and has the buy-in of every major web browser, tech company, and hardware manufacturer. It’s still relatively new, though, and new standards are notoriously slow to gain momentum. Adoption is still fairly limited, but AV1 is well positioned to grow more quickly now.

Note that audio will be encoded using a different set of codecs and is synchronized with the video stream by the playback application.
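Codec efficiency translates directly into NVR storage. A rough Python estimate for continuous recording, assuming constant bitrates of 4 Mbit/s for H.264 and half that for H.265 at similar quality (illustrative figures only; real cameras vary with resolution, scene content, and settings):

```python
SECONDS_PER_DAY = 24 * 60 * 60


def storage_gb_per_day(bitrate_mbps, cameras=1):
    """Decimal gigabytes written per day for continuous recording at a constant bitrate."""
    bits = bitrate_mbps * 1_000_000 * SECONDS_PER_DAY * cameras
    return bits / 8 / 1e9


# Assumed bitrates for illustration: H.265 at roughly half the H.264 bitrate.
for codec, mbps in [("H.264", 4.0), ("H.265", 2.0)]:
    print(f"{codec}: {storage_gb_per_day(mbps, cameras=4):.1f} GB/day for 4 cameras")
```

With these assumptions, four H.264 cameras fill roughly 173 GB a day, and switching to H.265 halves that, which is often the practical argument for newer codecs in an NVR.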

Connecting to an RTSP camera

NVRs that support RTSP/ONVIF cameras will typically let you specify arbitrary settings to connect any compatible camera. The catch with RTSP is that the URL required varies by manufacturer. You should be able to get what you need from the camera documentation; failing that, the URL can often be found in publicly available information online. This is the generic format for an RTSP connection:

rtsp://[username:password]@[ip_address]:[port]/[suffix]

username:password - The credentials for accessing the camera. There will be defaults out of the box, but you will have changed those during setup (right?). Interestingly, some manufacturers don’t require credentials at all, in which case both they and the @ are left out.

ip_address - The IP address of the camera on your local network.

port - The default RTSP port is 554. It usually only differs if the camera has been configured to use another port, for example when it is published to the internet.

suffix - This is the manufacturer specific bit which defines the channel to access, and possibly some other access settings.
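Because the credentials sit inside the URL, a password containing characters like @ or : can confuse the parsing. A small Python sketch (function name hypothetical) that assembles the generic format above and percent-encodes the credentials; whether a particular NVR accepts percent-encoded passwords is worth testing before relying on it.

```python
from urllib.parse import quote


def build_rtsp_url(ip_address, suffix, username=None, password=None, port=554):
    """Assemble rtsp://[username:password@]ip_address:port/suffix.

    Credentials are percent-encoded so characters such as '@' or ':' in a
    password don't break the URL structure.
    """
    auth = ""
    if username:
        auth = quote(username, safe="")
        if password:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"rtsp://{auth}{ip_address}:{port}/{suffix.lstrip('/')}"


print(build_rtsp_url("192.168.1.210", "Streaming/Channels/101", "admin", "12345"))
print(build_rtsp_url("192.168.1.210", "live", "admin", "p@ss:1"))
```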

Some examples:

rtsp://admin:12345scw@192.168.1.210:554/cam/realmonitor?channel=1&subtype=1

rtsp://admin:12345@192.168.1.210:554/Streaming/Channels/101

rtsp://admin:12345scw@192.168.1.210/unicast/c2/s2/live

rtsp://192.168.1.210:554/LiveChannel/0/media.smp

rtsp://192.168.1.210/h264.sdp
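Going the other way, Python’s standard urllib.parse.urlsplit can break any of these URLs back into the fields an NVR setup screen usually asks for. In this sketch (function name hypothetical), the port falls back to the default 554 when the URL omits it, and any query string is kept as part of the manufacturer-specific suffix.

```python
from urllib.parse import urlsplit


def describe_rtsp_url(url):
    """Break an RTSP URL into username, password, IP address, port and suffix."""
    parts = urlsplit(url)
    if parts.scheme != "rtsp":
        raise ValueError(f"not an rtsp:// URL: {url}")
    suffix = parts.path.lstrip("/")
    if parts.query:
        suffix += "?" + parts.query  # e.g. channel/subtype selectors
    return {
        "username": parts.username,
        "password": parts.password,
        "ip_address": parts.hostname,
        "port": parts.port or 554,  # RTSP default when the URL omits the port
        "suffix": suffix,
    }


for url in [
    "rtsp://admin:12345scw@192.168.1.210:554/cam/realmonitor?channel=1&subtype=1",
    "rtsp://admin:12345scw@192.168.1.210/unicast/c2/s2/live",
    "rtsp://192.168.1.210/h264.sdp",
]:
    print(describe_rtsp_url(url))
```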

See How to connect Amcrest RTSP cameras to Blue Iris for a practical example. As you’ll see, you may need to specify codec information as well, depending on the NVR you are using. H.264/MPEG-4 will be the most common for security cameras.

Summary

IP cameras, including many Wi-Fi models, can be used not only with hardware NVRs but with a variety of streaming software and services. Using these tools requires some degree of protocol knowledge, at least enough to understand what the interface is talking about.

Streaming video from these cameras will usually use the RTP protocol to carry the encoded video, and at this stage cameras primarily use H.264 for encoding. RTP sessions can be set up and controlled by several protocols, with RTSP or SIP being the norm for the camera-to-NVR connection: RTSP for local NVRs and SIP for cloud services. Playback or rebroadcasting from the NVR may be handled by WebRTC, as it allows broad cross-platform access in web pages and smartphone apps.